The fact is that we can understand Hawking's 1980s TTS. Sounding natural is harder: you have to deal with things like F0 declination, with how long phonemes are going to be, and with breaks in utterances.

If you want to start thinking about the problem more, try inputting these sentences into Ivona: "Do you really want to see all of it? Do you want to see all of it? I want to see all of it." Notice how it tries to match how we would speak: rising around "really" in the first sentence and rising at the end of both questions. That it recognizes that questions go up at the end is expected, but in some ways it's amazing that it recognizes "really" as something that should go up.
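The intonation effects mentioned here (F0 declination, a terminal rise on questions, a local rise on an emphasized word like "really") can be illustrated with a toy pitch-target generator. This is a minimal sketch under assumed numbers (a 200 Hz start, a 160 Hz end, a 60 Hz question rise, a 30 Hz accent), one target per syllable. The function `f0_contour` and all of its parameters are hypothetical; this is not how Ivona or any particular engine works, and real systems predict these contours from data.

```python
def f0_contour(n_syllables: int, is_question: bool,
               emphasis: tuple[int, ...] = (),
               start_hz: float = 200.0, end_hz: float = 160.0,
               rise_hz: float = 60.0, rise_syllables: int = 2,
               accent_hz: float = 30.0) -> list[float]:
    """Return one F0 target (Hz) per syllable for a toy intonation model.

    All default values are illustrative assumptions, not measured data.
    """
    if n_syllables < 1:
        raise ValueError("need at least one syllable")
    contour = []
    for i in range(n_syllables):
        # Declination: the baseline falls linearly from start_hz to end_hz.
        frac = i / (n_syllables - 1) if n_syllables > 1 else 0.0
        f0 = start_hz + (end_hz - start_hz) * frac
        # Local pitch accent on emphasized syllables (e.g. "real" in "really").
        if i in emphasis:
            f0 += accent_hz
        # Terminal rise: the last `rise_syllables` syllables ramp up for questions.
        if is_question:
            k = i - (n_syllables - rise_syllables)
            if k >= 0:
                f0 += rise_hz * (k + 1) / rise_syllables
        contour.append(round(f0, 1))
    return contour


if __name__ == "__main__":
    # "Do you real-ly want to see all of it?": 10 syllables, accent on "real".
    print(f0_contour(10, True, emphasis=(2,)))
    # "I want to see all of it.": 7 syllables, plain statement.
    print(f0_contour(7, False))
```

Comparing the two printed contours shows the three effects side by side: both fall over time, only the question turns upward at the end, and only the emphasized syllable gets a local bump.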

When you're selecting from a finite amount of recorded speech, you have to choose the pieces that fit best, and in many cases the result isn't going to be perfect.
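Choosing something that fits from recorded speech is usually framed as a search problem in concatenative (unit-selection) synthesis: each candidate recording gets a target cost (how far it is from the desired pitch and duration), each pair of neighbors gets a join cost (how audible the seam is), and the cheapest path through the candidates wins. The sketch below assumes that framing with made-up features (mean F0 in Hz, duration in ms) and simple absolute-difference costs, solved with a Viterbi-style dynamic program. The `Unit` type, `select_units`, and all numbers are hypothetical and not taken from any particular system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Unit:
    phone: str   # phone label of the recorded snippet
    f0: float    # mean pitch of the snippet, Hz
    dur: float   # duration of the snippet, ms


def select_units(targets: list[tuple[str, float, float]],
                 inventory: list[Unit], join_weight: float = 1.0) -> list[Unit]:
    """Pick one recorded Unit per target (phone, f0, dur), minimizing the sum
    of target costs plus weighted join costs with dynamic programming."""
    if not targets:
        return []
    cands = [[u for u in inventory if u.phone == p] for p, _, _ in targets]
    if any(not c for c in cands):
        raise ValueError("some target phone has no recorded unit")

    def target_cost(u: Unit, t: tuple[str, float, float]) -> float:
        # Distance from the desired pitch and duration (hypothetical metric).
        return abs(u.f0 - t[1]) + abs(u.dur - t[2])

    def join_cost(a: Unit, b: Unit) -> float:
        # Pitch jump at the boundary between consecutive units.
        return join_weight * abs(a.f0 - b.f0)

    # table[i][j] = (best total cost of a path ending in cands[i][j], predecessor index)
    table = [[(target_cost(u, targets[0]), -1) for u in cands[0]]]
    for i in range(1, len(targets)):
        row = []
        for u in cands[i]:
            cost, prev = min(
                (table[i - 1][k][0] + join_cost(cands[i - 1][k], u), k)
                for k in range(len(cands[i - 1]))
            )
            row.append((cost + target_cost(u, targets[i]), prev))
        table.append(row)

    # Trace back from the cheapest final candidate.
    j = min(range(len(table[-1])), key=lambda idx: table[-1][idx][0])
    path = []
    for i in range(len(targets) - 1, -1, -1):
        path.append(cands[i][j])
        j = table[i][j][1]
    return path[::-1]


if __name__ == "__main__":
    inventory = [Unit("a", 100, 80), Unit("a", 150, 80),
                 Unit("b", 140, 90), Unit("b", 105, 90)]
    targets = [("a", 120, 80), ("b", 120, 90)]
    print(select_units(targets, inventory))
```

With `join_weight=0` the search reduces to picking each unit independently by target cost; raising it trades closeness to the target for smoother seams. When no candidate is close on both counts, the best path is still a compromise, which is why the output "isn't going to be perfect in many cases."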

The real question is the algorithms being used. Perhaps the fear is that the algorithm would be pirated. Just look at the history of digital audio (or video) encoding, with things like Xvid and Ogg: basically every time a good algorithm even starts to gain traction, an open "alternative" is made available practically overnight. That alone is enough to deter research in this field. This is not to say I don't like open algorithms; in fact, I believe that any standard algorithm should be open. Perhaps a Web service that converted text to speech would be one option, but it would have limited applicability.

Edit: Perhaps a Kickstarter or something similar would be a good idea, since this type of feature would be useful to so many people.

Disclaimer: this isn't my area, and I'm going to miss some folks. Also, I'm really pointing to research groups, so take these names as starting points and look at their students and the other professors working with them.

First off, the Blizzard Challenge is a major hub of activity. Festival is an important piece of software. Interspeech is an important conference (take a look at the speech synthesis track and its organizers). Simon King (Edinburgh) just had a paper linked on HN a few days ago and does important work. Mark Gales (Cambridge) does work here, too. I apologize for all of the important and interesting work that I'm missing.

TTS has definitely evolved over the years: if you compare the Google Maps voice to Stephen Hawking's, it's night and day. However, I can definitely understand how TTS technology looks stagnant. Part of this is that going from nothing to something reasonable happened exceedingly quickly. Early TTS research was supported by the US government, which saw that early systems were comprehensible (if not wonderful sounding) and declared victory. Funding went to other problems in computational linguistics (like speech recognition and information extraction), and so did a lot of the workforce.

Google and Nuance have good systems as well, but there's a balance between resources and perfect speech. You can't compare what systems can do to what some call center has installed as its technology; how many banks have online banking that seems like it's from the 90s? Many systems still sound pasted together because that's how enterprise technology goes. Modern systems, by contrast, usually involve many hours of speech from a single person and use variable-length units to form more natural speech.
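The point that modern systems draw variable-length units from many hours of one speaker's recordings can be illustrated with a deliberately simplified sketch: greedily cover the target phone sequence with the longest contiguous runs that occur in the recorded corpus, so fewer joins (and fewer audible seams) are needed. The function name `greedy_variable_units` and the toy phone strings are hypothetical, and greedy longest-match is a simplification assumed here for illustration; production systems typically choose units with a cost-based search rather than this greedy rule.

```python
def greedy_variable_units(target: list[str], corpus: list[str]) -> list[tuple[int, int]]:
    """Cover `target` with the longest contiguous runs found in `corpus`.

    Returns (corpus_start, length) spans in target order. Fewer spans means
    fewer joins between separately recorded pieces.
    """
    spans = []
    i = 0
    while i < len(target):
        best_start, best_len = -1, 0
        for s in range(len(corpus)):
            # Length of the match between target[i:] and corpus[s:].
            n = 0
            while (i + n < len(target) and s + n < len(corpus)
                   and corpus[s + n] == target[i + n]):
                n += 1
            if n > best_len:
                best_start, best_len = s, n
        if best_len == 0:
            raise ValueError(f"phone {target[i]!r} never occurs in the corpus")
        spans.append((best_start, best_len))
        i += best_len
    return spans


if __name__ == "__main__":
    corpus = ["h", "e", "l", "o", "w", "o", "r", "l", "d"]  # toy "recordings"
    target = ["w", "o", "r", "l", "d", "h", "e", "l", "o"]
    spans = greedy_variable_units(target, corpus)
    print(spans, "joins:", len(spans) - 1)
```

Here the nine-phone target is covered by two long units with a single join, whereas one-phone units would need eight joins. More recorded speech makes long matches more likely, which is one reason large single-speaker corpora help.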

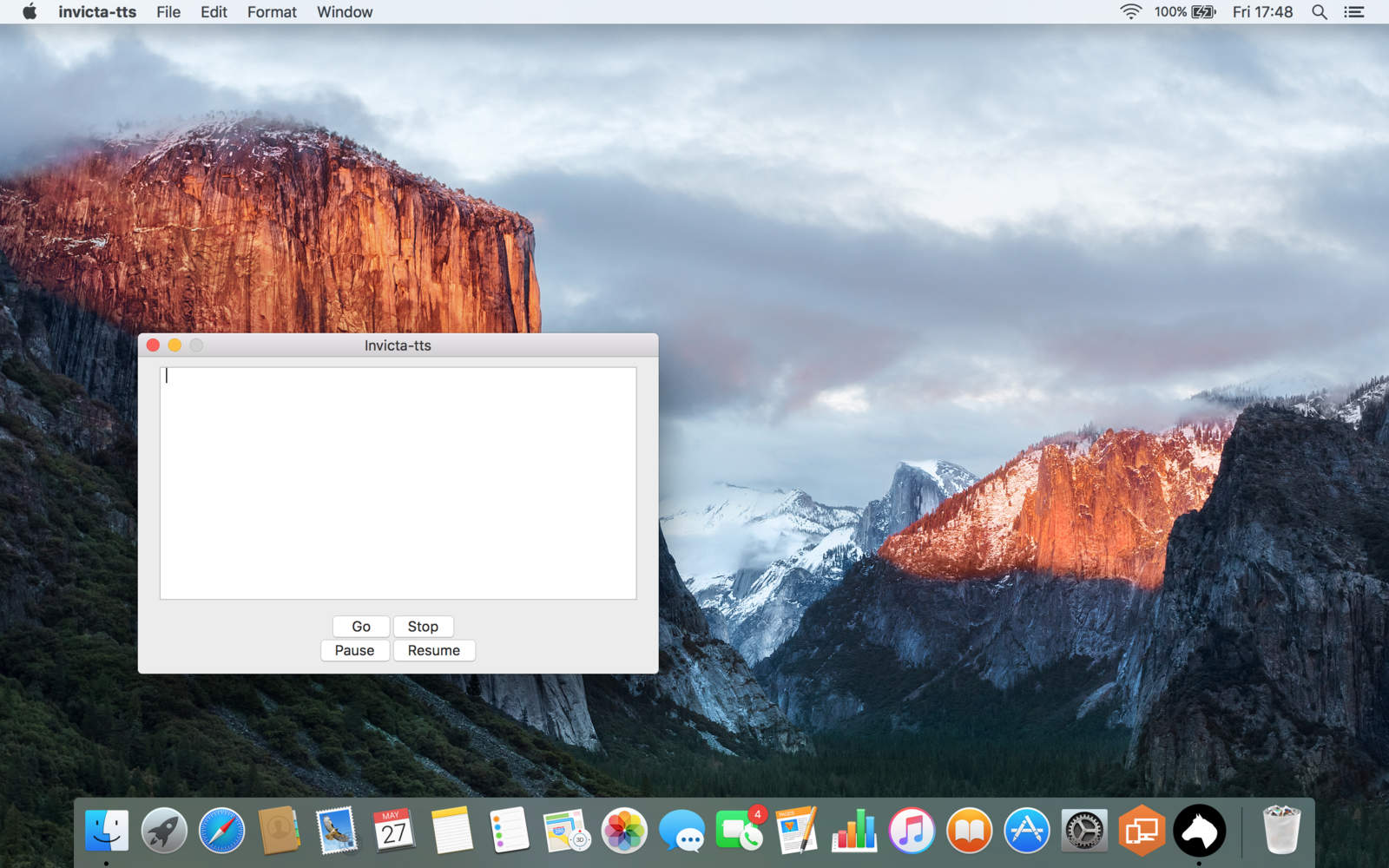

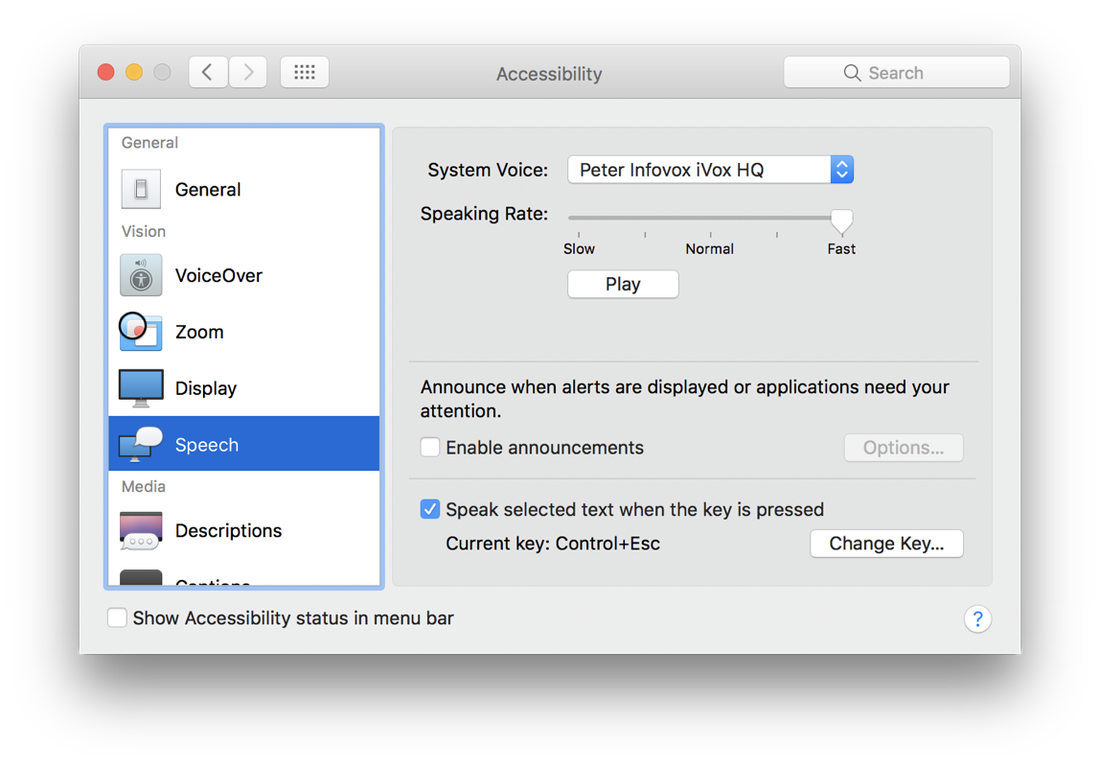


I've thought about getting into this area myself, but I was too afraid there was not enough market for it. As far as CPU power goes, today's average PC easily has 5x what's necessary for perfect speech.
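One way to make a headroom claim like "5x" concrete is the real-time factor (RTF): synthesis time divided by the duration of the audio produced, so 5x headroom corresponds to an RTF of about 0.2. The sketch below measures it for any synthesizer passed in as a callable. `real_time_factor` and the silent `dummy_synthesize` stand-in are hypothetical; a real measurement would plug in an actual TTS engine and average over many sentences.

```python
import time
from typing import Callable, Sequence


def real_time_factor(synthesize: Callable[[str], Sequence[float]],
                     text: str, sample_rate: int) -> float:
    """Wall-clock synthesis time divided by the duration of the output audio.

    RTF < 1 means faster than real time; RTF = 0.2 means 5x headroom.
    """
    start = time.perf_counter()
    samples = synthesize(text)
    elapsed = time.perf_counter() - start
    audio_seconds = len(samples) / sample_rate
    if audio_seconds == 0:
        raise ValueError("synthesizer produced no audio")
    return elapsed / audio_seconds


def dummy_synthesize(text: str) -> list[float]:
    # Hypothetical stand-in: 0.3 s of silence per word at 16 kHz.
    return [0.0] * (4800 * len(text.split()))


if __name__ == "__main__":
    rtf = real_time_factor(dummy_synthesize, "Do you really want to see all of it?", 16000)
    print(f"RTF = {rtf:.4f}")
```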
